OpenAI, as if trying to break its own record for the most confusing product lineup in history, has released two new AI models for ChatGPT: OpenAI o3 and OpenAI o4-mini.

These join GPT-4.5, which is still in testing, and GPT-4o, the default option for ChatGPT users. Naturally, I wanted to see how they would perform against each other.

But while there are all kinds of stress tests designed to find the very limit of what AI models can do, I’m more interested in how they perform under more normal circumstances. Would it matter to an average person who uses AI which model ChatGPT turned to for their occasional trivia question or funny photo?

I designed four distinct prompts: one focused on visual logic, one on visual creativity, one on linguistics and translation, and one on poetry. Then I ran each prompt through o3, o4-mini, GPT-4o, and GPT-4.5 and watched how they handled it.

Before looking at the results, it’s worth noting what each model is supposedly best at, according to OpenAI at least. The new o3 model is supposed to be the genius among geniuses, with exceptional reasoning capabilities and a knack for interpreting images. It’s still under the GPT-4 family umbrella, but OpenAI says it performs better than its siblings on many fronts. The o4-mini model is the budget-friendly alternative. It’s faster and cheaper, if slightly less powerful.

GPT-4.5 is supposedly the most capable model OpenAI has ever built in the broadest sense. It’s meant to be more thoughtful, better at understanding context and at longer-term thinking, and generally better at combining logic and empathy.

For most people, the ChatGPT model they’ll deal with is GPT-4o. OpenAI’s first natively multimodal model can do it all and do it well, even if it lacks the logical or emotional flourishes of its newer siblings.

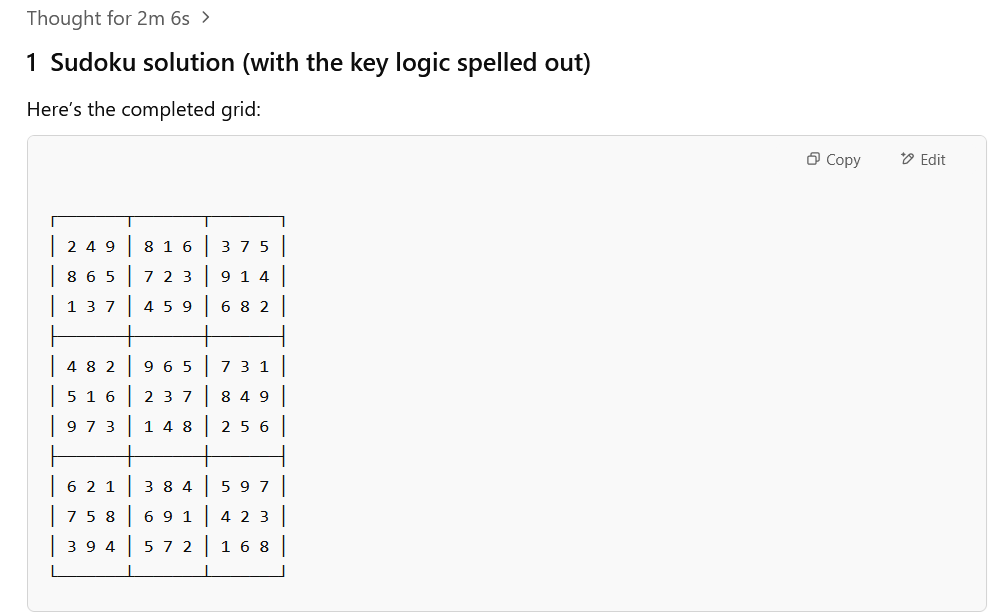

Sudoku

I started with a test of the visual reasoning that the new models claim to be so adept at. I decided to combine it with some logic testing that even I could understand: a Sudoku puzzle.

I also wanted them to explain their answers, as otherwise it’s not much of an AI assistant, just a machine for solving Sudoku. I wanted them not just to dump an answer but to walk through the logic. I uploaded the same image to each model and asked: “Here’s a photo of a Sudoku puzzle. Can you solve it and explain your reasoning step by step?”

The answer was yes for all of them. The o3 and o4-mini versions showed their thinking before going through the answer, but all of them got it right. What was more interesting was the brevity of the o4-mini and the very mathematical reasoning in both new models.

GPT-4o and GPT-4.5, by contrast, were more conversational, explaining why “you can’t put any other number here” rather than showing an actual equation. As a further test, I put a deliberately impossible Sudoku sheet to the same test. They all spotted the issue, but where the others simply walked through the problems, GPT-4o, for some reason, wrote out an ‘answer’ sheet that just had a lot of zeroes on it.
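For the curious, the simplest kind of impossible Sudoku, one where the same digit already appears twice in a row, column, or box, can be spotted mechanically. The Python sketch below is my own illustration, not anything the models produced: the function name `find_conflicts` and the sample grid are made up, and it only catches duplicated givens, not subtler puzzles that turn out to be unsolvable later on.

```python
from collections import Counter


def find_conflicts(grid):
    """Return (unit name, digit) pairs where a digit repeats in a row,
    column, or 3x3 box. A 0 marks an empty cell."""
    units = []
    for i in range(9):
        units.append((f"row {i + 1}", [grid[i][c] for c in range(9)]))
        units.append((f"column {i + 1}", [grid[r][i] for r in range(9)]))
    for br in range(0, 9, 3):
        for bc in range(0, 9, 3):
            box = [grid[r][c]
                   for r in range(br, br + 3)
                   for c in range(bc, bc + 3)]
            units.append((f"box at row {br + 1}, column {bc + 1}", box))

    conflicts = []
    for name, cells in units:
        counts = Counter(d for d in cells if d != 0)
        for digit, n in counts.items():
            if n > 1:
                conflicts.append((name, digit))
    return conflicts


# Hypothetical, mostly empty grid with two 5s in the first row.
broken = [[0] * 9 for _ in range(9)]
broken[0][0] = 5
broken[0][8] = 5

print(find_conflicts(broken))  # [('row 1', 5)]
```

An empty list means no obvious clash, not that the puzzle can be solved.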

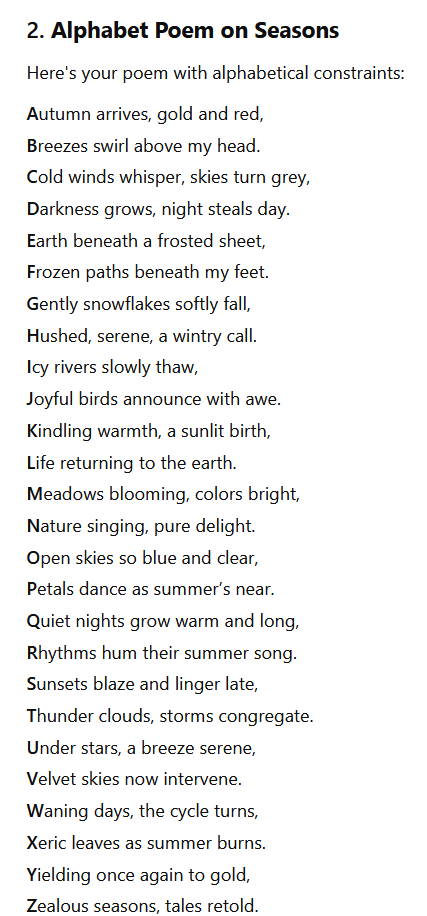

Poetry

This one was meant to test creativity, with some limitations to season it with logic. I asked the models to: “Write a short poem about the changing seasons, but each line must start with the next letter of the alphabet, beginning with ‘A’.”

This kind of prompt forces a model to strike a balance between structure and imagination. You need creativity to describe the seasons, and discipline to follow the alphabetical format. While they all followed the format, o3 stood out for being the only one that didn’t rhyme.

All of the others managed to stick to the brief, with greater or lesser artistic ability, and all had a mix of couplets and four-line rhymes. They were all a little bland, good for a greeting card perhaps, but hardly Dickinson. Still, the GPT-4.5 poem was charming and worth sharing, as I did above.
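If you’d rather not check the alphabetical rule by eye, it is easy to verify in a few lines of Python. This is a minimal sketch under my own assumptions: `follows_alphabet` is a made-up helper, blank lines are ignored, and the sample text is a placeholder rather than any model’s actual poem.

```python
import string


def follows_alphabet(poem):
    """True if each non-blank line starts with the next letter, from 'A'."""
    lines = [ln.strip() for ln in poem.splitlines() if ln.strip()]
    if not lines or len(lines) > 26:
        return False
    for expected, line in zip(string.ascii_uppercase, lines):
        # Skip leading quotes or punctuation and find the first letter.
        first = next((ch for ch in line if ch.isalpha()), "")
        if first.upper() != expected:
            return False
    return True


# Placeholder lines, not output from any of the models.
sample = "Autumn leaves fall\nBranches go bare\nCold winds arrive"
print(follows_alphabet(sample))  # True
print(follows_alphabet("Autumn leaves fall\nCold winds arrive"))  # False
```

It says nothing about whether the poem rhymes or is any good, which is the part that still needs a human.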

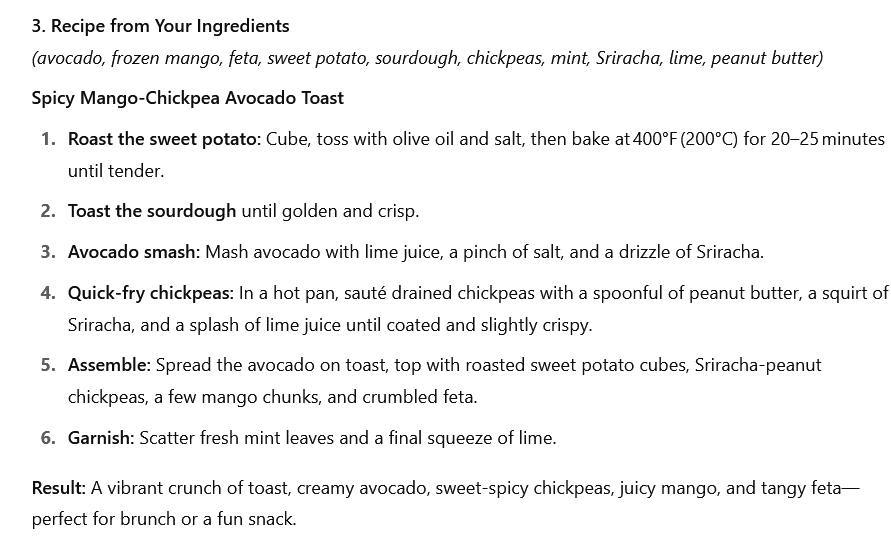

What can I cook?

For this test, I gathered a bunch of random ingredients and took a picture of them, then uploaded the image, which included an avocado, frozen mango chunks, feta, a sweet potato, sourdough bread, chickpeas, mint, Sriracha, lime, and peanut butter.

Why that mix? For no reason other than to see what would happen when I told the AI models: “Here’s a picture of the ingredients I have. What can I cook with them?”

o3 was very practical with a suggestion of “Spicy Sweet‑Potato & Chickpea Toasts with Avocado‑Mango Smash and Peanut‑Sriracha Drizzle.” It broke down the different components into a table with the ingredients and recipe for each, and even a bullet point list of reasons why it would taste good.

The o4-mini recipe, which you can see above, for “Spicy Mango‑Chickpea Avocado Toast,” was straightforward with the instructions and included a nice description of the “result” of the recipe. GPT-4o had a similar idea with “Sweet and Spicy Avocado-Chickpea Toast,” but, surprisingly for the otherwise conversational model, it was a very brief guide, even shorter than o4-mini’s.

Perhaps not surprisingly, GPT-4.5 came out with a full menu of dishes, including “Avocado & Chickpea Toast with Mango Salsa,” “Sweet Potato & Tofu Buddha Bowl,” “Spicy Mango-Peanut Tofu Wrap,” “Thai-Style Sweet Potato & Chickpea Soup,” and “Refreshing Mango-Mint Sorbet.”

Further, each had a description and discussion of the taste and style. I’m genuinely eager to make the sorbet. It’s just a blend of frozen mango cubes with fresh mint, a squeeze of lime, and a spoonful of peanut butter to make it creamy, which you then freeze and serve with mint leaves and lime zest.

Rain Translate

The last test was all about nuance. I asked the AI models to: “Translate the phrase ‘It’s raining cats and dogs’ into Japanese, ensuring the meaning is preserved culturally.”

Literal translations of idioms rarely work. What I was looking for was an understanding of not just the words, but the context. This was mostly a reminder of how far even the baseline ChatGPT models have come. They all came back with variations on the same answer: that there isn’t an exact translation, but the closest is to say it’s raining like someone has overturned a bucket.

GPT-4.5 did give me the literal translation, while also explaining why it wouldn’t make sense to say it in Japanese. Personally, I just enjoyed the extreme emoji usage of GPT-4o, which, for some reason, felt it had to translate the phrase into those little pictures too.

Model mania

I will say that none of the models performed poorly. Each had its own quirks and emphasized different things. o3 was the most analytical and precise, while o4-mini took the same approach but was a little faster. GPT-4.5 took the most pains to mimic human responses, and GPT-4o just loves emojis.

At the more extreme levels of testing or with complex prompts, I’m sure each model stands out as very different from the others. But for basic prompts that aren’t centered on business or software code, you can’t really go wrong with any of them. If I’m in the kitchen, though, I may defer to GPT-4.5, at least if the sorbet turns out as good as it promises.