Docker has transformed how we build, ship, and run applications. It has quickly become a crucial tool for developers and operations teams worldwide. Its power lies in simplifying deployment and creating consistent environments. However, like many popular tools, it’s surrounded by a number of misconceptions and half-truths. Frankly, some of these Docker myths have been repeated so often that they’ve become background noise I’m tired of hearing.

This post aims to cut through that noise to set the record straight and help you leverage Docker more efficiently.


5

Docker is useful for developers only

Calling Docker a tool just for developers misses the bigger picture. While developers definitely love it for creating consistent coding environments and easily managing project dependencies, its real power unfolds across the entire software delivery pipeline.

For operations teams managing the servers, Docker simplifies deploying applications, ensures they run consistently everywhere, and makes updates and rollbacks much smoother.
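Part of why rollbacks get easier is that each release ships as a tagged image, and the previous image is typically still available after an update. The minimal Python sketch below models only that bookkeeping. The `Deployment` class and the `myapp:1.0`-style tags are hypothetical, and no real Docker API is called.

```python
from typing import List, Optional


class Deployment:
    """Hypothetical model of which image tag is live for one service.

    No Docker API is called; this only tracks the release history.
    """

    def __init__(self, service: str) -> None:
        self.service = service
        self.history: List[str] = []  # image tags, oldest first

    def deploy(self, image: str) -> str:
        # An update means switching the service to a new, already-built image tag.
        self.history.append(image)
        return image

    def current(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        # A rollback means pointing the service back at the previous tag.
        # In this model nothing is rebuilt; we assume the old image still exists.
        if len(self.history) < 2:
            raise RuntimeError("no earlier image to roll back to")
        self.history.pop()
        return self.history[-1]


web = Deployment("web")
web.deploy("myapp:1.0")
web.deploy("myapp:1.1")
print(web.rollback())  # prints myapp:1.0
```

The point of the design is that an update and a rollback are the same kind of operation: both just choose which existing image runs.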

So, although Docker's benefits start with developers, it improves the process for the entire tech team (and even for hobbyists who simply enjoy tinkering with such tools).

4

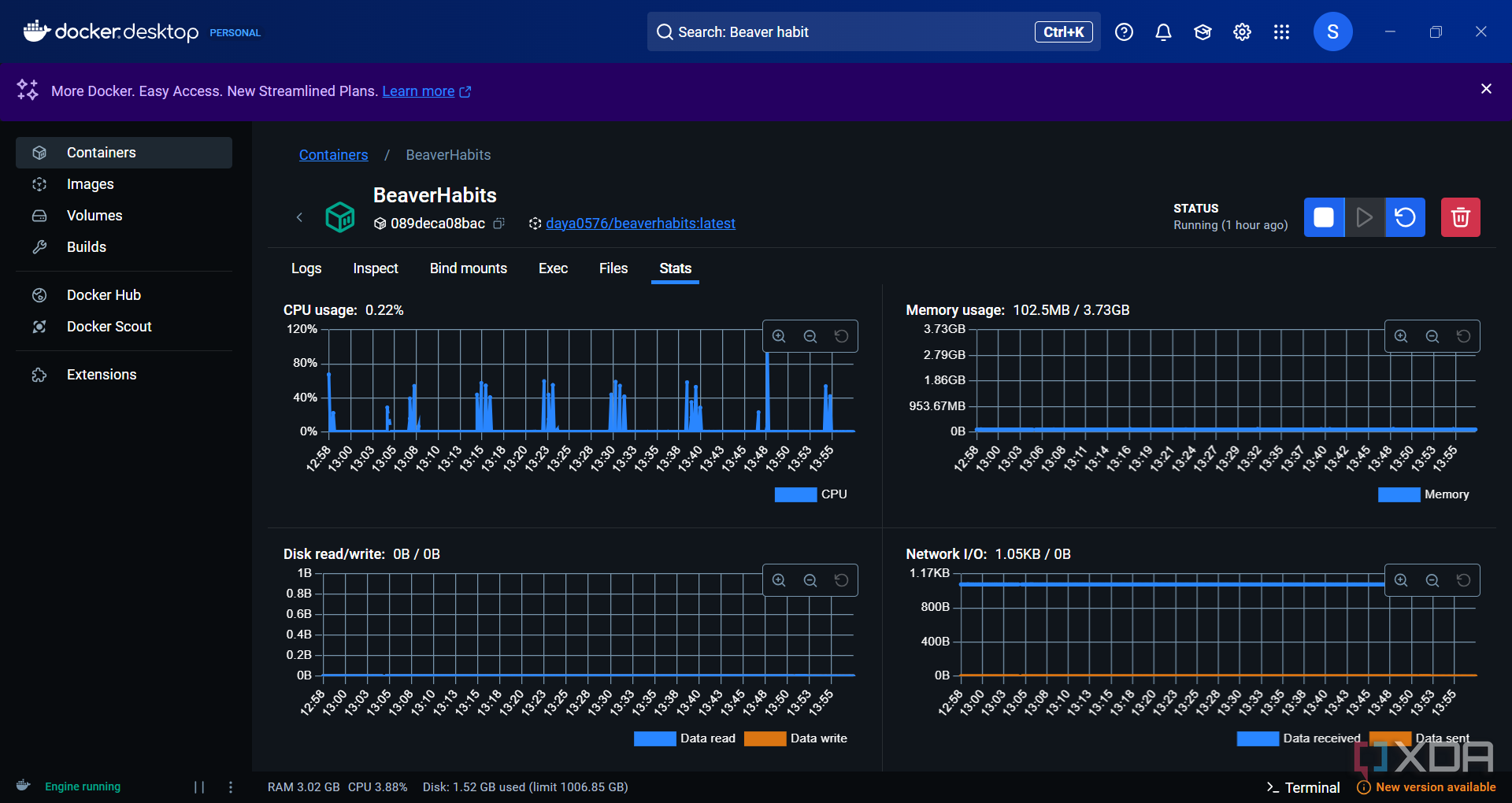

Docker containers are just lightweight virtual machines

Let’s break down why thinking of Docker containers as just lightweight virtual machines (VMs) isn’t quite right. Each VM runs a full guest operating system, including its own kernel, on top of a hypervisor. Although this provides strong isolation, a VM consumes significant resources (CPU, RAM, disk space) and takes longer to boot.

Containers, by contrast, use OS-level virtualization. They run directly on the host operating system’s kernel, managed by a container runtime such as Docker Engine. That’s why they start and stop much faster, have significantly lower overhead (less RAM and CPU usage), and allow higher density (more containers than VMs on the same hardware).
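The density point comes down to simple arithmetic: every VM carries its own guest OS in memory, while containers share the host kernel. The Python sketch below counts how many copies of an app fit in a host's RAM under each model. All the numbers (a 32 GB host, 2 GB for the host OS, a 256 MB app, 1 GB of guest-OS overhead per VM, 16 MB of overhead per container) are illustrative assumptions, not measurements, and the model ignores CPU, disk, and hypervisor costs.

```python
def max_instances(host_ram_mb: int, host_os_mb: int, app_mb: int,
                  per_instance_overhead_mb: int) -> int:
    """How many app copies fit in RAM, given a fixed per-instance overhead.

    Simplified model: only memory is considered.
    """
    usable = host_ram_mb - host_os_mb               # RAM left after the host OS
    per_instance = app_mb + per_instance_overhead_mb
    return max(usable // per_instance, 0)


# Illustrative numbers only (assumptions, not benchmarks).
HOST_RAM_MB = 32 * 1024
HOST_OS_MB = 2 * 1024
APP_MB = 256

# Each VM pays for a full guest OS; containers share the host kernel.
vms = max_instances(HOST_RAM_MB, HOST_OS_MB, APP_MB, per_instance_overhead_mb=1024)
containers = max_instances(HOST_RAM_MB, HOST_OS_MB, APP_MB, per_instance_overhead_mb=16)

print(f"VMs: {vms}, containers: {containers}")  # VMs: 24, containers: 112
```

Change the assumed overheads and the exact ratio moves, but as long as each VM duplicates an operating system, the container column stays larger.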

Containers provide process-level isolation, built on Linux kernel features such as namespaces and cgroups. By default, processes in one container cannot directly see or interfere with processes in another container. Starting a container is typically near-instantaneous, because it is essentially just starting a new, isolated process on the host OS.

VMs are still the better fit when you need to run an entirely different operating system or need the strongest possible isolation. In short, VMs and containers both provide isolated environments, but they achieve it through very different approaches.


3

Docker solves all scaling problems

This is a significant overstatement that confuses many. While Docker plays a crucial role in modern scalable architectures, simply containerizing an application doesn’t magically handle a massive load efficiently. Docker cannot fix an application that wasn’t designed to scale.

If your application’s performance is limited by a single database server, message queue, or external API, running hundreds of identical Docker containers for your application’s frontend won’t help. Inefficient algorithms or code that causes high CPU or memory usage will still be inefficient inside a container; scaling out just multiplies that inefficiency.
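A back-of-the-envelope model makes the bottleneck argument concrete. Assume, hypothetically, that every request passes through one container replica and then one shared database, so the system can only go as fast as the slower of the two. The Python sketch below uses made-up capacities purely for illustration; `system_throughput` is a hypothetical helper, not part of any Docker tooling.

```python
def system_throughput(replicas: int, per_replica_rps: float, database_rps: float) -> float:
    """Requests/second the whole system can serve in this simplified model.

    Assumes every request needs one replica and one database round trip,
    and that replicas scale perfectly (no coordination overhead).
    """
    if replicas < 0 or per_replica_rps < 0 or database_rps < 0:
        raise ValueError("capacities must be non-negative")
    frontend_rps = replicas * per_replica_rps  # adding containers raises this...
    return min(frontend_rps, database_rps)     # ...but the database caps the total


# Hypothetical capacities: 200 req/s per container, 2,000 req/s for the database.
for n in (5, 10, 100):
    print(n, system_throughput(n, per_replica_rps=200, database_rps=2000))
# 5 1000
# 10 2000
# 100 2000
```

Past 10 replicas, the extra containers add cost but no throughput in this model; only raising the database's capacity, or reducing the database work per request, moves the ceiling.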

Docker only provides uniform, easy-to-manage containers. You still need to invest heavily in scalable application design and infrastructure resources to build a large system. Docker is an essential foundation, but it doesn’t solve scaling on its own.

2

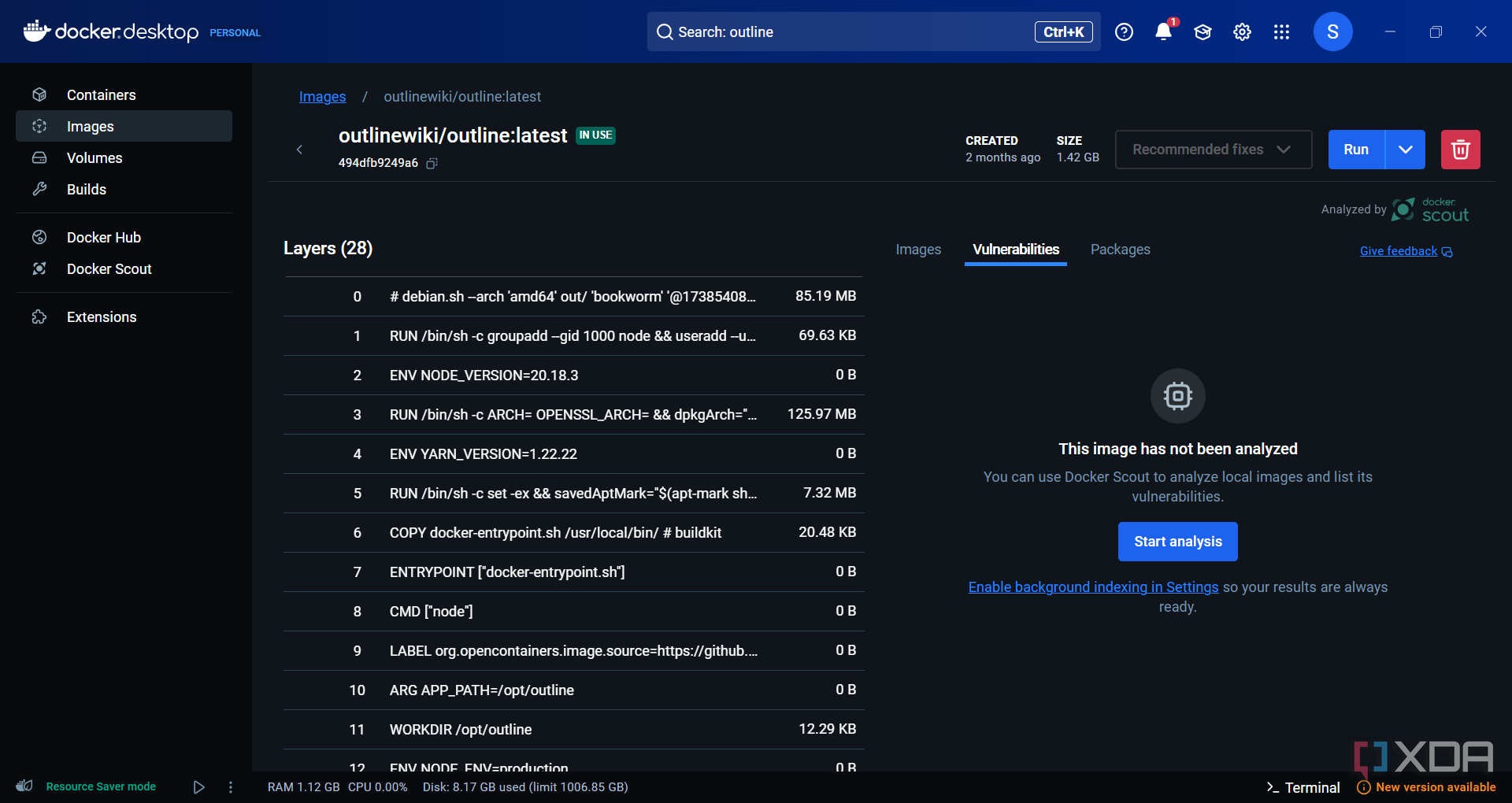

Docker Desktop is the only way to run Docker on Mac or Windows (and it’s always free)

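For a long time after its release, Docker Desktop was indeed free for almost all users, which was one of the reasons behind its popularity. That changed in 2021: Docker Desktop remains free for personal use, education, non-commercial open-source projects, and small businesses (fewer than 250 employees and less than $10 million in annual revenue), but larger organizations need a paid subscription. Paid plans also unlock extras such as Docker Build Cloud, Docker Debug, faster support, and more.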
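The licensing rule that replaced the old “always free” model can be written as a small predicate. This is a simplified sketch of the terms Docker announced in 2021 (free for personal use, education, non-commercial open source, and businesses with fewer than 250 employees and less than $10 million in annual revenue). The `Usage` type and `needs_paid_subscription` function are hypothetical, and Docker’s current subscription agreement is the authoritative source.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """Hypothetical description of how an organization uses Docker Desktop."""
    commercial: bool               # False for personal, education, non-commercial open source
    employees: int = 0
    annual_revenue_usd: float = 0.0


def needs_paid_subscription(usage: Usage) -> bool:
    # Non-commercial use stays free.
    if not usage.commercial:
        return False
    # A business stays free only if it is under BOTH limits.
    small_business = usage.employees < 250 and usage.annual_revenue_usd < 10_000_000
    return not small_business


print(needs_paid_subscription(Usage(commercial=True, employees=40, annual_revenue_usd=2_000_000)))   # False
print(needs_paid_subscription(Usage(commercial=True, employees=40, annual_revenue_usd=25_000_000)))  # True
```

Note the “and”: a 40-person company with $25 million in revenue is no longer a small business under these terms.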

Also, despite its convenience, Docker Desktop is not the only way to run Docker containers or interact with Docker Engine on macOS and Windows. For example, on Windows 10 and 11, you can enable Windows Subsystem for Linux 2 (WSL 2), install a Linux distribution, and install the standard Docker Engine directly inside that Linux environment. Of course, it’s not the most user-friendly way to get the job done.

1

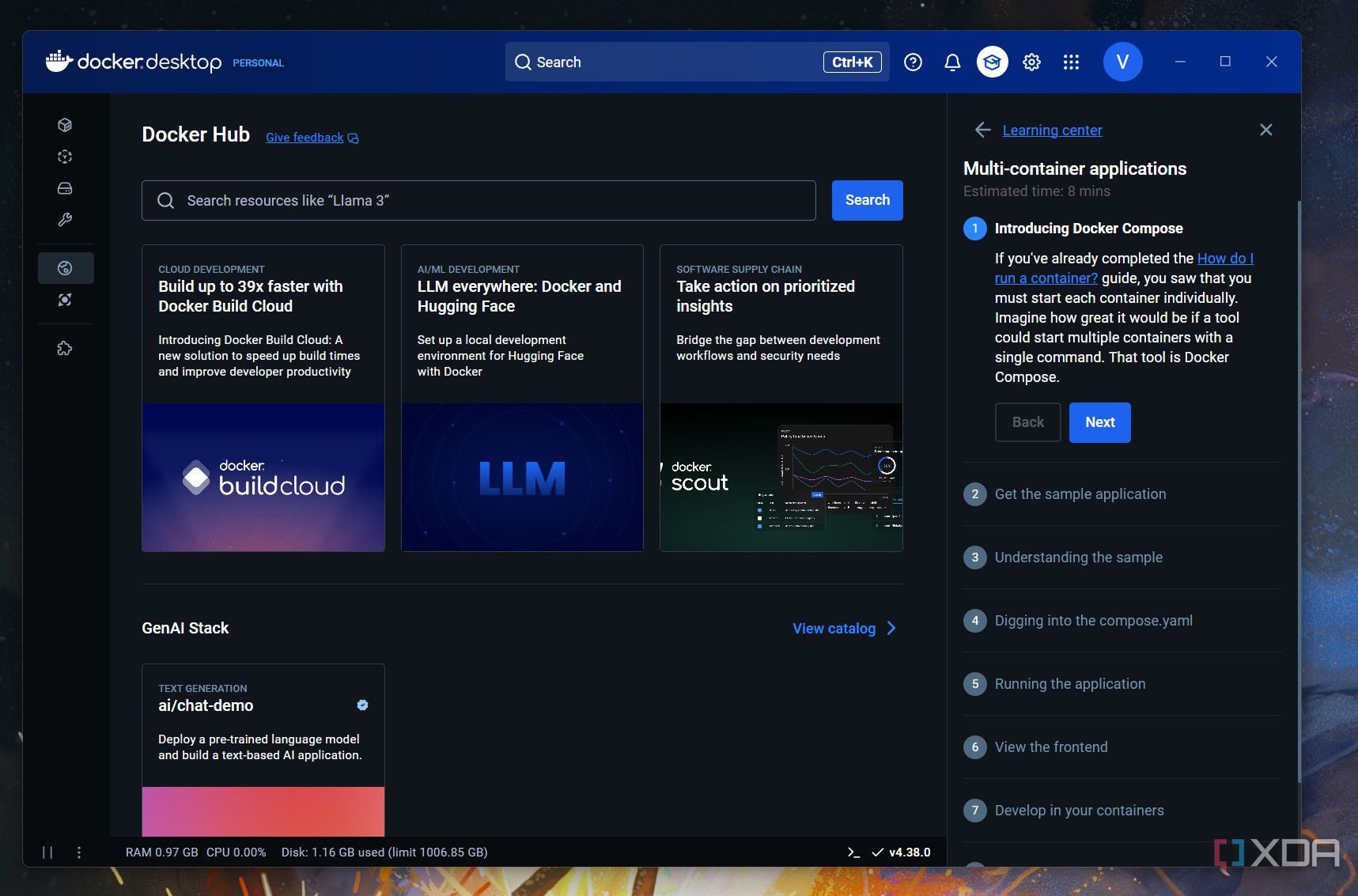

Docker is dying because of the competition

Despite the licensing changes and the rise of alternatives, Docker is far from dead. Its influence and usage remain massive. For most developers starting with containers or working locally, Docker provides the most familiar, well-documented, and arguably smoothest experience for building, running, and testing containers.

Docker Hub is still the world’s largest and most widely used container image registry, and millions of developers pull images from it daily. The container ecosystem has matured significantly with tools like Kubernetes and Podman, yet Docker remains a crucial part of it.
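Part of Docker Hub’s reach comes from it being the default registry: when an image name doesn’t include a registry host, Docker pulls it from Docker Hub, and single-word official images live under the `library/` namespace. The Python sketch below approximates that name expansion, so `nginx` becomes `docker.io/library/nginx:latest`. It is a simplified, hypothetical re-implementation for illustration, not Docker’s own parser, and it ignores digests and several edge cases.

```python
def normalize_image_ref(ref: str) -> str:
    """Approximate how Docker expands a short image name (simplified sketch).

    Digests ("@sha256:...") and other edge cases are not handled.
    """
    name, tag = ref, "latest"
    # A tag is whatever follows the last ":" that comes after the last "/".
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    first, sep, rest = name.partition("/")
    # Treat the first component as a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        domain, path = first, rest
    else:
        domain, path = "docker.io", name  # no host given: default to Docker Hub

    if domain == "index.docker.io":       # legacy Docker Hub hostname
        domain = "docker.io"
    # Official Docker Hub images live under the "library/" namespace.
    if domain == "docker.io" and "/" not in path:
        path = "library/" + path
    return f"{domain}/{path}:{tag}"


for ref in ("nginx", "nginx:1.25", "bitnami/redis", "ghcr.io/owner/app:v1"):
    print(ref, "->", normalize_image_ref(ref))
# nginx -> docker.io/library/nginx:latest
# nginx:1.25 -> docker.io/library/nginx:1.25
# bitnami/redis -> docker.io/bitnami/redis:latest
# ghcr.io/owner/app:v1 -> ghcr.io/owner/app:v1
```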

The truth about Docker

So there you have it: some of the most persistent Docker myths debunked. Whether it’s confusing containers with VMs, expecting Docker to solve scaling on its own, or dismissing it as a developers-only tool, these misconceptions can hold you back. Move past them and harness Docker’s potential to streamline your development workflows. Meanwhile, check out our dedicated post if you’re looking for Docker containers to run in your home lab.