Back in the day, ESXi was all the rage in the home lab community, as it provided several enterprise-grade virtualization features without forcing users to pay a dime. However, Broadcom discontinued the free version of the platform after acquiring VMware, forcing server enthusiasts to seek greener, open-source pastures for their home lab workloads.

For months, Proxmox served as a safe haven for the many home labbers who flocked to its feature-laden ecosystem. But in a twist of fate, Broadcom reinstated the free version of ESXi with version 8.0U3e, giving casual users the option to switch back to the VMware suite without spending thousands of dollars on licenses. While it’s undoubtedly great news for fans of ESXi, and I’ll probably run it in my home lab at some point just to test it out, I’m satisfied with my Proxmox workstation and would rather not migrate back to ESXi.

4

ESXi is rife with network driver issues

Good luck running it on your old system

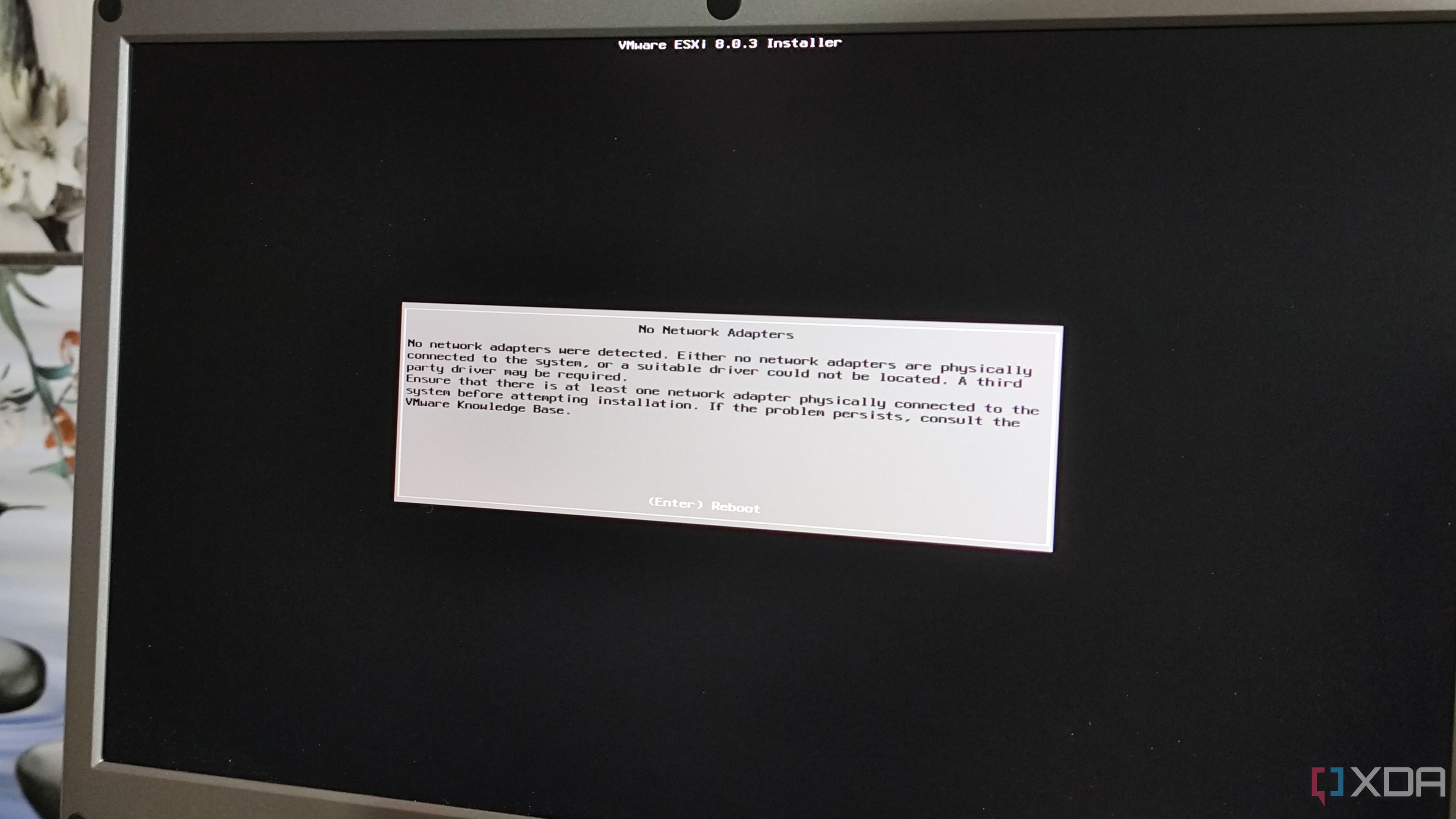

Since I’ve got my old PC gathering dust in my rat’s nest of a home lab, I figured I could outfit it with ESXi while working on my Proxmox server in the meantime. Unfortunately, the installer refused to proceed because it couldn’t detect a compatible network adapter. Now, I’ll admit that the ASRock B550 Phantom Gaming motherboard in the system is far from new. But considering that it’s the first system I used to set up Proxmox, I was a bit miffed by the compatibility issues.

Troubleshooting the error only led me on a wild goose chase. Switching to my TP-Link TX401 10G NIC did nothing to resolve the issue, and my USB-to-Ethernet adapter was just as useless. After that, I disabled the option to boot into the proprietary TOS on my TerraMaster F8 SSD Plus and tried using it as the ESXi host machine, only to run into the same network issue. Since desperate times call for desperate measures, I repeated the process on my beloved F4-424 Max NAS, with identical results. Having come this far, I removed the Proxmox boot drive from my enterprise-grade dual Xeon server, plugged the Ethernet cable into its built-in network card, and fired up ESXi. Lo and behold, it still showed the “no network adapters detected” error!

In stark contrast, Proxmox has a fairly simple installation process and is so lightweight that you can even set it up on a modest Intel N100 system. Since my only remaining options are buying a new, ESXi-compatible network card or building a custom ISO with the correct NIC drivers, I’d rather stay on Proxmox.

3

Ability to allocate over 8 v-cores to VMs

It’s especially useful for server PC owners

As if the setup process for ESXi wasn’t painful enough, the fact that I could allocate a maximum of 8 virtual cores to a VM was the icing on this terrible cake. Let’s say you’re using a consumer-grade 6-core, 12-thread processor. That gives you 12 logical threads to hand out as virtual cores on your home server. While 8 v-cores are more than enough for the average Linux distribution, assigning 10 should deliver better performance in Windows VMs. And that’s before we dive into the overprovisioning rabbit hole.

If you’re the proud owner of a server system with as many as 16 cores and 32 threads, you’ll want more leeway in allocating virtual CPUs to your virtual machines. Throw in dual-processor setups, and it’s easy to see why the 8 v-core limit won’t cut it for your hardcore projects. Luckily, Proxmox isn’t bound by ESXi’s v-core limit, so you can grant 32 virtual CPUs to your macOS virtual machine if you want to boost its performance.

2

Support for clusters

Unlike ESXi’s paid-only clustering facility

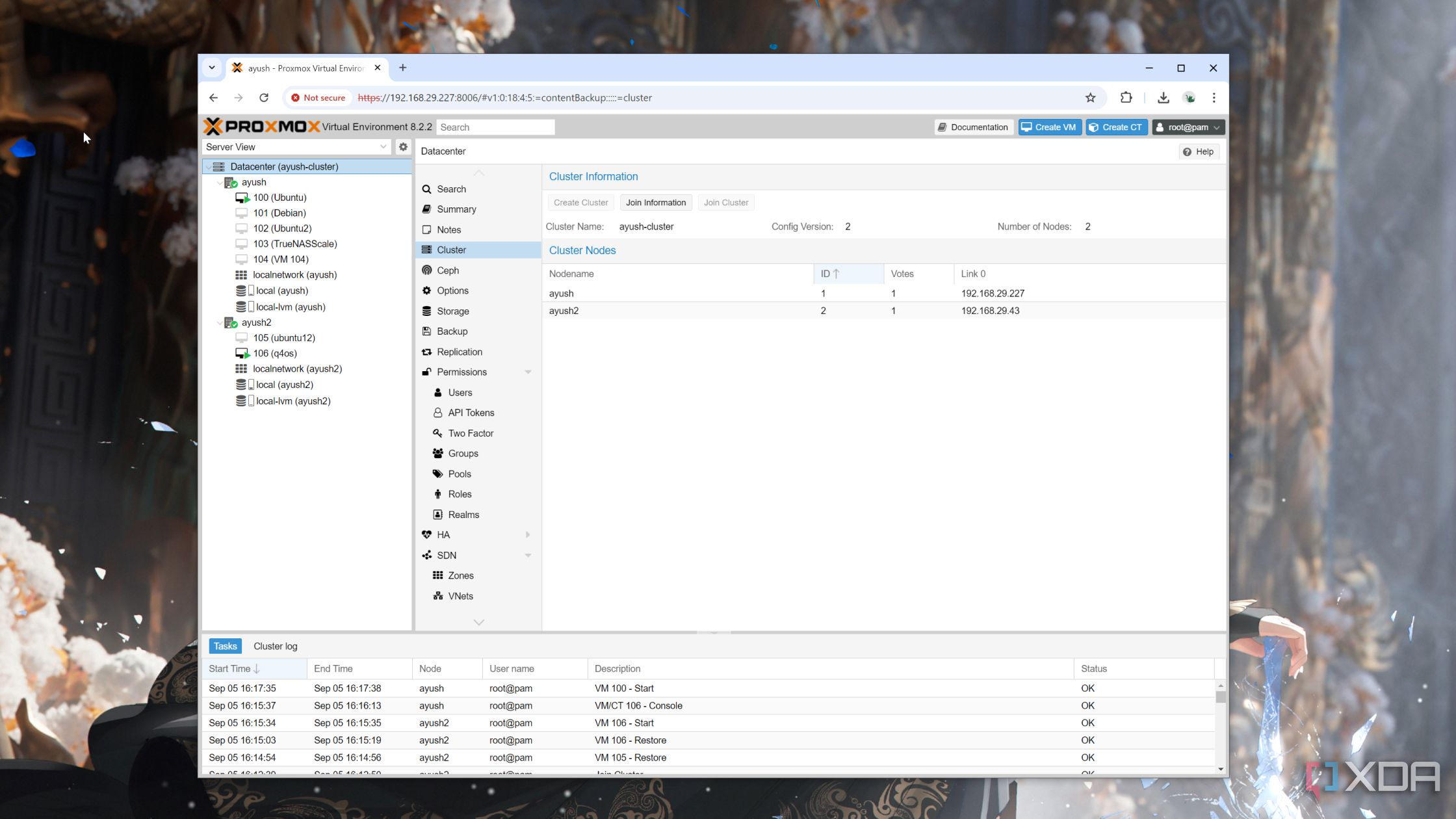

While we’re on the subject of production-ready servers, building a cluster of workstations has several perks. Not only can you manage your virtual machines and container fleet from a unified interface, but you can also start dabbling in high-availability setups. Whether you’re a tinkerer who loves experimenting with wacky services or a small business owner managing locally hosted apps, a high-availability cluster can keep your essential software suite running even when a node goes offline.

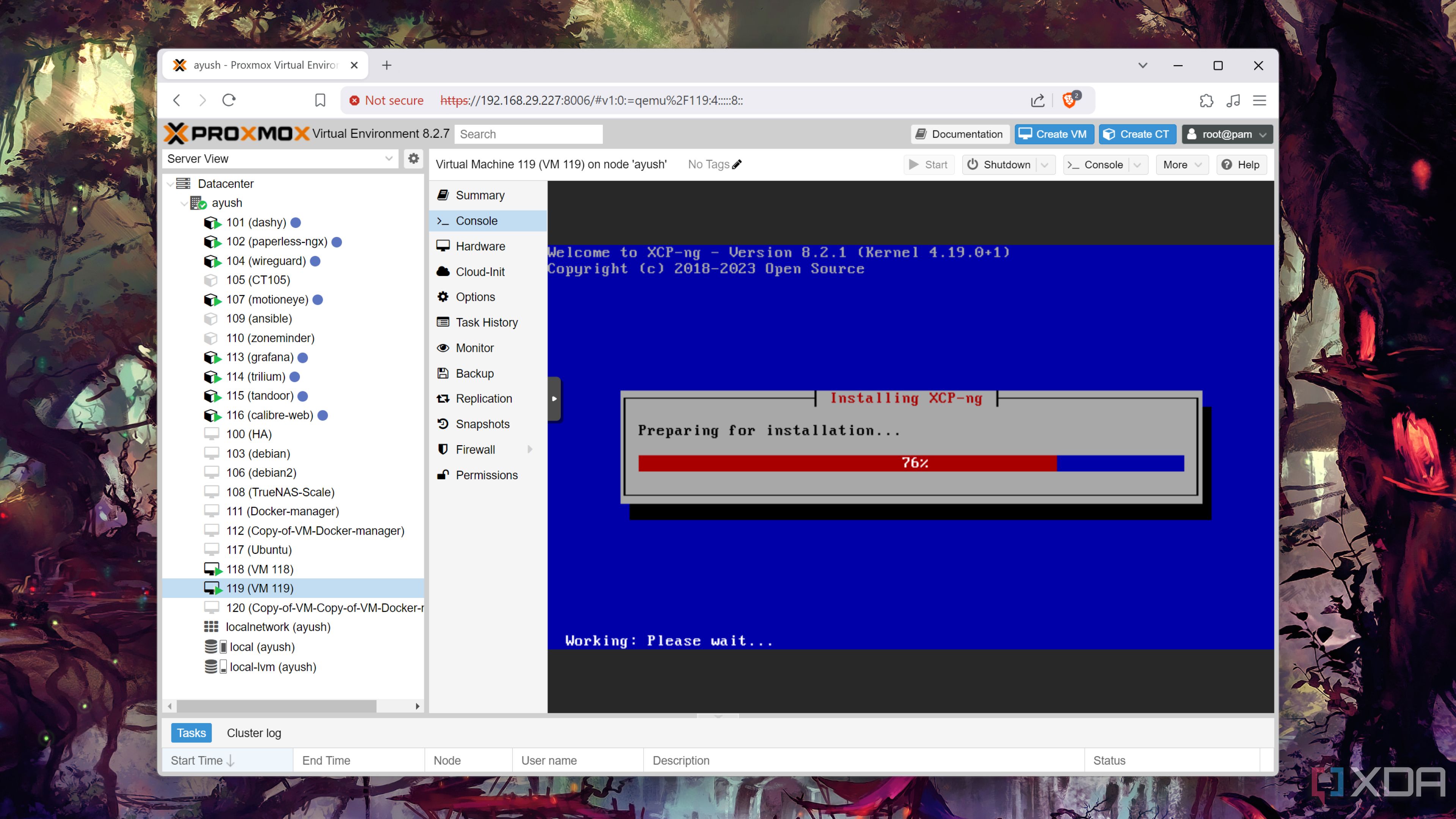

Unfortunately, ESXi’s clustering features aren’t available in the free version, so you’ll have to pay for an expensive license to build a high-availability lab. And although I find the clustering features in XCP-ng and Harvester better than those in Proxmox, you can still deploy robust high-availability PVE workstations without spending extra on licenses.

1

No fear of the free license getting revoked

Once bitten, twice shy

The discontinuation of the free ESXi release in 2024 is one of the most anti-consumer moves I’ve seen in recent times, especially considering how much of the home lab community relied on the platform for its virtualization tasks. The free version of ESXi currently ships with a non-expiring embedded license, but given Broadcom’s track record, I’d rather not deal with another scenario where the company revokes the free license without rhyme or reason.

Meanwhile, Proxmox doesn’t lock production-tier features behind a paywall; the enterprise repository and first-party support are the only things you miss out on with the free version. Of course, I’d be naive to ignore the possibility that a sudden takeover could change Proxmox’s pricing model. But given that the platform has been around for well over a decade without major changes to its business model, I’d rather trust Proxmox than a firm that scrapped its free offering only to bring it back out of nowhere.

Proxmox or ESXi: Which one do you prefer?

While I may sound bitter about ESXi, there’s no denying that the platform has its perks. If you’re pursuing a career in DevOps and want an industry-grade platform for your experiments, ESXi is the better option, as plenty of firms rely on it. It’s also deeply integrated into the VMware ecosystem, which makes it a better fit for professionals and sysadmin enthusiasts.

But if you’re a casual home labber, you won’t regret staying on Proxmox. Me? Since I’m interested in DevOps, I’ll probably use nested virtualization to run ESXi on my Proxmox server at some point (and laugh like a cartoon villain while doing so).